— Our Blog —

Teaching English Proficiency Test Preparation

Prepare students for success on English proficiency exams with strategies and insights for test prep. Whether you teach IELTS, TOEFL, PTE, or other exam preparation, discover best practices for each test section, ways to boost student confidence, and resources for structuring effective study sessions. From scoring tips to test-taking strategies, these articles cover every aspect of guiding learners toward their target scores.

-

8 Tips for Teaching English for Test Preparation

By Krzl Light Nuñes

With high demand and increased earning potential, it’s no surprise that preparing students for the IELTS, TOEFL, PTE, and other English proficiency tests is a popular niche among...

-

Using AI Tools for English Language Proficiency Placement, Leveling, and Progress Monitoring

By Rashmi

Artificial Intelligence (AI) seems to be everywhere these days. Many industries are incorporating it into their day-to-day operations, and English Language Training (ELT) is no exception. AI tools for assessment...

-

Tech-Driven Solutions: Learn About Global Language Assessment Provider Bright Language

By Rashmi

A pioneer in language assessment, Bright Language has been a preferred language proficiency assessment provider to organizations around the world for more than 30 years. An early adopter of advancing...

-

How Can I Start Teaching IELTS Exam Prep?

By Krzl Light Nuñes

Looking to stand out as an English teacher? Expand your career prospects and earning potential by carving out a teaching niche, such as preparing students to take the world's most...

-

A Guide to Teaching TOEFL Test Prep: What It Entails and How to Get Started

By Rashmi

The Test of English as a Foreign Language (TOEFL) is a standardized test used for admissions and placements around the world. Teaching TOEFL test prep is a great niche for...

-

Leveraging English Proficiency Testing in Academics and Employment

By Linda D'Argenio

English language testing constitutes a significant part of the English teaching industry. Every year, millions of students take various tests to demonstrate their proficiency in English. To put this in...

-

PTE: A Testing Success Story

By Rashmi

The Pearson Test of English (PTE) launched worldwide in 2009. Since then, it has become a leading exam for study applications around the world, as well as for visa applications for work and migration...

-

Mastering the Art of Teaching PTE Test Prep: Expert Advice From Bridge Alumni

By Krzl Light Nuñes

Are you thinking of building or broadening your TEFL niche by becoming a Pearson Test of English (PTE) trainer? If so, you may also wonder how other English teachers have...

-

Teaching PTE Test Prep: 8 Resources to Guide Your English Language Classes

By Bridge

This guest article was written by Pearson, a leading global publisher of English language curricula and resources, courseware, and assessments. The Pearson Test of English, or PTE, is quickly becoming...

-

The Ultimate Guide to Teaching PTE Test Prep

By Savannah Potter

The Pearson Test of English, or PTE, is a revolutionary English proficiency test legally recognized by more than 70 nations worldwide. If you’re an English teacher looking to specialize in...

-

Big Business: High-Stakes English Tests Drive ELT Test Prep Solutions

By Jennifer Maguire

Denver, CO. – As the remote delivery of English language tests becomes more commonplace, resources to prepare test takers have evolved as well. Gone are the days of visiting a...

-

Online English Language Testing – Increased Access Comes With Security Challenges

By Jennifer Maguire

Denver, CO. – English language testing has long been used for a variety of purposes, such as hiring and promotions, college and university admissions, and immigration. The shift to online...

-

Key Takeaways From the BridgeUniverse English Language Testing Summit

By Rashmi

BridgeUniverse successfully hosted its second Summit with the participation of various leaders in the English Language Testing field. The Virtual Summit on English Language Testing: High Stakes for Learners &...

-

English Testing at Home: Convenient and Safe, but Is It Accessible (and Acceptable) to All?

By Michele DeBella

When established test-development leaders discuss their online English proficiency tests, similar themes emerge. Online tests, most of which can be taken at home, have a wider reach than computer-based tests...

-

English Test Providers Jockey for Position as Pandemic Accelerates Online Trend

By Nick Thomas

Luis von Ahn, the co-founder and CEO of Duolingo, had to travel to the neighboring country of El Salvador to sit his English language proficiency exam for a U.S. college...

-

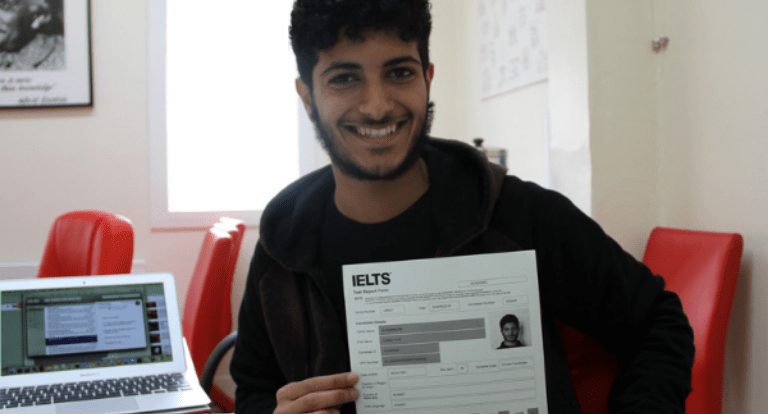

Nasim, on Specializing in IELTS Exam Prep as a Teacher in Azerbaijan

By Krzl Light Nuñes

Have you ever wondered what it's like to teach a specialized TEFL/TESOL niche, such as exam prep? We interviewed Nasim, who teaches at a language school in Azerbaijan, about the...